What is Varnish and how does it work?

Varnish is an HTTP reverse proxy used to speed up websites. A reverse proxy is a proxy that appears to clients as the origin server. Varnish keeps web pages in a cache (in RAM or on disk); when a client requests something that has been requested before, Varnish returns the stored response without going to the actual server. This reduces work that burdens the server or takes time, such as database operations, backend requests, and third-party requests.

It serves visitors cached copies of pages instead of regenerating dynamic pages on every visit, so the site loads quickly.

Let's say we have a heavily visited page that pulls its data from the database. Every request triggers its own database operations. As traffic grows, so does the resource usage on the server side: RAM and CPU usage climb, the web server's response time increases, and eventually the server becomes unresponsive.

This is where Varnish helps. How? Suppose the traffic and transaction load continue. The first time the page is requested, Varnish forwards the request to the server because the page is not yet in its cache. The server returns a response, which Varnish keeps in the cache for the configured time. Until that time is up, the same request is answered from the Varnish cache rather than from the database, regardless of which user sends it. Once the time expires and the cached entry is no longer valid, Varnish requests the page from the server again.

If the cache duration is set to 1 minute on a page that receives 1,000 requests per minute, the server handles only 1 request instead of 1,000.
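The arithmetic above can be checked with a small simulation. This is only a sketch of the idea, not how Varnish is implemented: `TtlCache`, `simulate`, and the `/heavy-page` URL are hypothetical names, and the requests are assumed to arrive evenly spaced over each minute.

```python
from typing import Callable, Dict, Tuple


class TtlCache:
    """Tiny in-memory cache with a fixed time-to-live per entry (illustrative only)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float]) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        # url -> (expiry timestamp, cached body)
        self.store: Dict[str, Tuple[float, str]] = {}

    def get(self, url: str, fetch_from_backend: Callable[[str], str]) -> str:
        now = self.clock()
        entry = self.store.get(url)
        if entry is not None and now < entry[0]:
            return entry[1]  # cache hit: the backend is not contacted
        # Cache miss or expired entry: ask the backend and store the answer.
        body = fetch_from_backend(url)
        self.store[url] = (now + self.ttl_seconds, body)
        return body


def simulate(requests_per_minute: int, ttl_seconds: float, minutes: int = 1) -> int:
    """Return how many requests reach the backend (assumes evenly spaced requests)."""
    backend_calls = 0
    current_time = 0.0

    def backend(url: str) -> str:
        nonlocal backend_calls
        backend_calls += 1
        return f"rendered {url}"

    cache = TtlCache(ttl_seconds, clock=lambda: current_time)
    for i in range(requests_per_minute * minutes):
        current_time = i * 60.0 / requests_per_minute  # simulated clock, in seconds
        cache.get("/heavy-page", backend)
    return backend_calls


print(simulate(1000, 60))  # -> 1
```

With a TTL of 0 every request would reach the backend, which is the situation described before the cache was introduced.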

VCL (Varnish Configuration Language)

Varnish uses a configuration language called VCL (Varnish Configuration Language). Through VCL you tell Varnish how to handle your HTTP traffic. It contains subroutines that define the rules by which incoming requests are cached, how long the cached copy is kept, and how it is invalidated.

Every incoming request flows through Varnish, and you can change how a request is handled by changing the VCL code. You can forward certain requests to a specific service, or have Varnish perform various actions on the request or response. This makes Varnish an extremely powerful HTTP processor, not just a caching tool.

The VCL file is translated to C code and compiled into a binary that Varnish loads, which makes Varnish run much faster. In addition, C code can be embedded directly in the VCL file.

VCL files are organized into subroutines, which are executed at different times. For example, one is executed when the request is received, and another when content is fetched from the backend server.

You can check the VCL documentation for VCL syntax, subroutines, actions, and many other features.

Varnish vs Memcached

- What is Memcached? It is a high-performance, distributed, in-memory data store: a key-value store for small chunks of arbitrary data (strings, objects) from the results of database calls, API calls, or page rendering. Keeping the data in memory is what makes Memcached fast, but it also means memory has to be allocated for its storage. Distributed means it can run across multiple servers. It resides in memory at the server layer of the application.

Fast object cache, High-performance, Stable

- What is Varnish? A high-performance HTTP accelerator. Varnish Cache is a web application accelerator that caches HTTP responses as a reverse proxy. It is installed in front of a server that speaks HTTP and is configured to cache its content. It is quite fast compared to its counterparts: depending on your architecture, it typically speeds up delivery by a factor of 300–1000x. Unlike Memcached, it does not live in the server layer; it sits in front of the web server as a caching HTTP reverse proxy. The reverse proxy part means it is located between your application and the outside world.

Fast object cache, Very Fast, Very Stable

Generally, Varnish is used for unauthenticated traffic (requests that arrive without a session cookie), while Memcached caches data for authenticated traffic. The two can therefore be used together.
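To make the division of labor concrete, here is a rough sketch of the two layers working together. It is a toy model, not real Varnish or Memcached client code: `Site`, `ObjectCache`, the `products` key, and the rule "a `Cookie` header means authenticated" are all assumptions made for illustration.

```python
from typing import Callable, Dict


class ObjectCache:
    """Stand-in for a Memcached-style key-value store (a plain dict here)."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}

    def get_or_compute(self, key: str, compute: Callable[[], str]) -> str:
        if key not in self.data:
            self.data[key] = compute()
        return self.data[key]


class Site:
    """Toy model: an HTTP page cache in front, an object cache inside the app."""

    def __init__(self) -> None:
        self.page_cache: Dict[str, str] = {}  # Varnish-like: URL -> whole response
        self.object_cache = ObjectCache()     # Memcached-like: key -> data fragment
        self.db_queries = 0
        self.app_renders = 0

    def _query_products(self) -> str:
        self.db_queries += 1  # the expensive database call
        return "product list"

    def _render(self, url: str, user: str) -> str:
        self.app_renders += 1
        products = self.object_cache.get_or_compute("products", self._query_products)
        return f"{url} for {user}: {products}"

    def handle(self, url: str, headers: Dict[str, str]) -> str:
        if "Cookie" in headers:
            # Assumed rule: a cookie marks an authenticated, user-specific request,
            # so the page cache is bypassed and the application renders the page.
            return self._render(url, headers["Cookie"])
        if url not in self.page_cache:
            self.page_cache[url] = self._render(url, "anonymous")
        return self.page_cache[url]


site = Site()
site.handle("/shop", {})                           # anonymous: rendered once, then cached
site.handle("/shop", {})                           # anonymous: served from the page cache
site.handle("/shop", {"Cookie": "session=alice"})  # authenticated: rendered by the app
print(site.app_renders, site.db_queries)           # -> 2 1
```

The authenticated request still has to be rendered by the application, but the database is queried only once because the product list comes from the object cache.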

Setup

Ubuntu/Debian users:

sudo apt-get install varnish

You can install it simply with apt-get. Varnish listens on port 6081 by default. If you want it to listen on port 80, change the DAEMON_OPTS setting in the /etc/default/varnish file.

Varnish's default configuration file is located at /etc/varnish/default.vcl. By default it is set up to fetch content from a backend running on port 8080. If you run your web server on port 8080 and set Varnish to listen on port 80 via DAEMON_OPTS above, requests to your server reach Varnish first; if nothing is found in the cache, they are passed on to your web server (the backend).
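One way to see which of the two paths a request took (answered from the cache, or passed on to the backend) is to look at the response headers. Varnish normally adds an `X-Varnish` header that carries one transaction ID on a miss and two on a hit. The helper below is a minimal sketch that assumes this header has not been removed or rewritten in your VCL; `classify_response` is a hypothetical name.

```python
from typing import Mapping


def classify_response(headers: Mapping[str, str]) -> str:
    """Return 'hit', 'miss', or 'unknown' based on the X-Varnish response header."""
    # Header names are case-insensitive, so normalize them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    transaction_ids = lowered.get("x-varnish", "").split()
    if not transaction_ids:
        return "unknown"  # no X-Varnish header: the response may not have passed through Varnish
    # Two IDs: this request plus the earlier request that populated the cache.
    return "hit" if len(transaction_ids) >= 2 else "miss"


print(classify_response({"X-Varnish": "32770"}))                     # -> miss
print(classify_response({"X-Varnish": "32773 32771", "Age": "12"}))  # -> hit
```

The header values here are made up for the example. In practice the mapping could come from any HTTP client, for instance the `headers` attribute of the response returned by `urllib.request.urlopen`.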

Varnish settings are defined in VCL (Varnish Configuration Language). The settings files contain subroutines that determine the backends to use, the directors that distribute load across these backends, the rules by which incoming requests are cached, and how the cache is invalidated. In our scenario, we make our definitions in the vcl_recv and vcl_fetch subroutines.

With the configuration in the VCL file below, Varnish caches successful (200) responses to every request that does not carry the "X-Requested-With" header (the condition we set) for 300 seconds.

    # Varnish 3.x syntax. In Varnish 4 and later, vcl_fetch is named
    # vcl_backend_response and return (lookup) in vcl_recv is return (hash).

    # Requests go to our web server running on port 8080.
    backend default {
        .host = "127.0.0.1";
        .port = "8080";
    }

    # Invoked at the start of a request.
    # The request can be manipulated here, e.g. a header can be added.
    sub vcl_recv {
        # Do not cache AJAX requests: most libraries add this header,
        # so if it is present, bypass the cache.
        if (req.http.X-Requested-With) {
            return (pass);
        }

        # Look up all remaining requests in the cache.
        return (lookup);
    }

    # Invoked when a response has been fetched from the backend.
    sub vcl_fetch {
        # If the backend returned 200, cache the content for 300 seconds.
        if (beresp.status == 200) {
            set beresp.ttl = 300s;
            return (deliver);
        }
    }
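The policy expressed by this configuration is small enough to restate as two plain functions, which can be handy for reasoning about which requests end up cached. This is only a model of the rules, not something Varnish runs; `recv_action` and `fetch_ttl` are hypothetical names.

```python
from typing import Mapping, Optional

CACHE_TTL_SECONDS = 300  # same value as beresp.ttl in the VCL


def recv_action(request_headers: Mapping[str, str]) -> str:
    """Mirror of vcl_recv: 'pass' for AJAX-style requests, 'lookup' for everything else."""
    header_names = {name.lower() for name in request_headers}  # names are case-insensitive
    return "pass" if "x-requested-with" in header_names else "lookup"


def fetch_ttl(status: int) -> Optional[int]:
    """Mirror of vcl_fetch: 300 seconds for a 200 response.

    None means this configuration sets no TTL and Varnish's built-in logic decides.
    """
    return CACHE_TTL_SECONDS if status == 200 else None


print(recv_action({"X-Requested-With": "XMLHttpRequest"}))  # -> pass
print(recv_action({"Accept": "text/html"}))                 # -> lookup
print(fetch_ttl(200), fetch_ttl(500))                       # -> 300 None
```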

Application

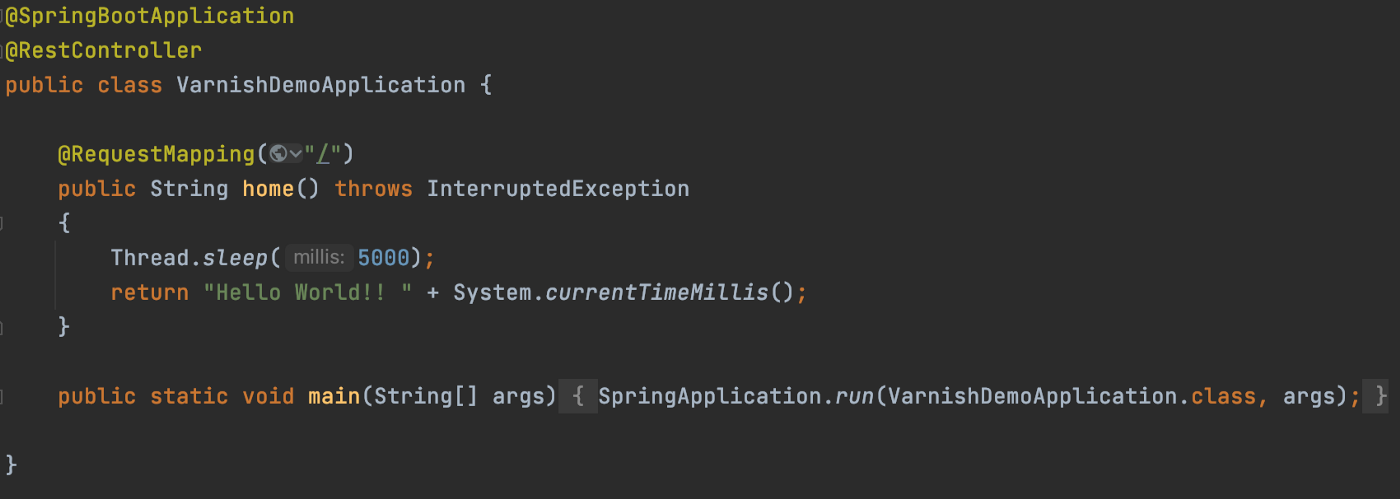

Using a simple Spring Boot application, we create an environment where each request takes about 5 seconds (Thread.sleep(5000)). (Never mind that it makes for a sluggish system 😋)

I started the version of the project without Varnish on port :8080 and the version with Varnish on port :8000.

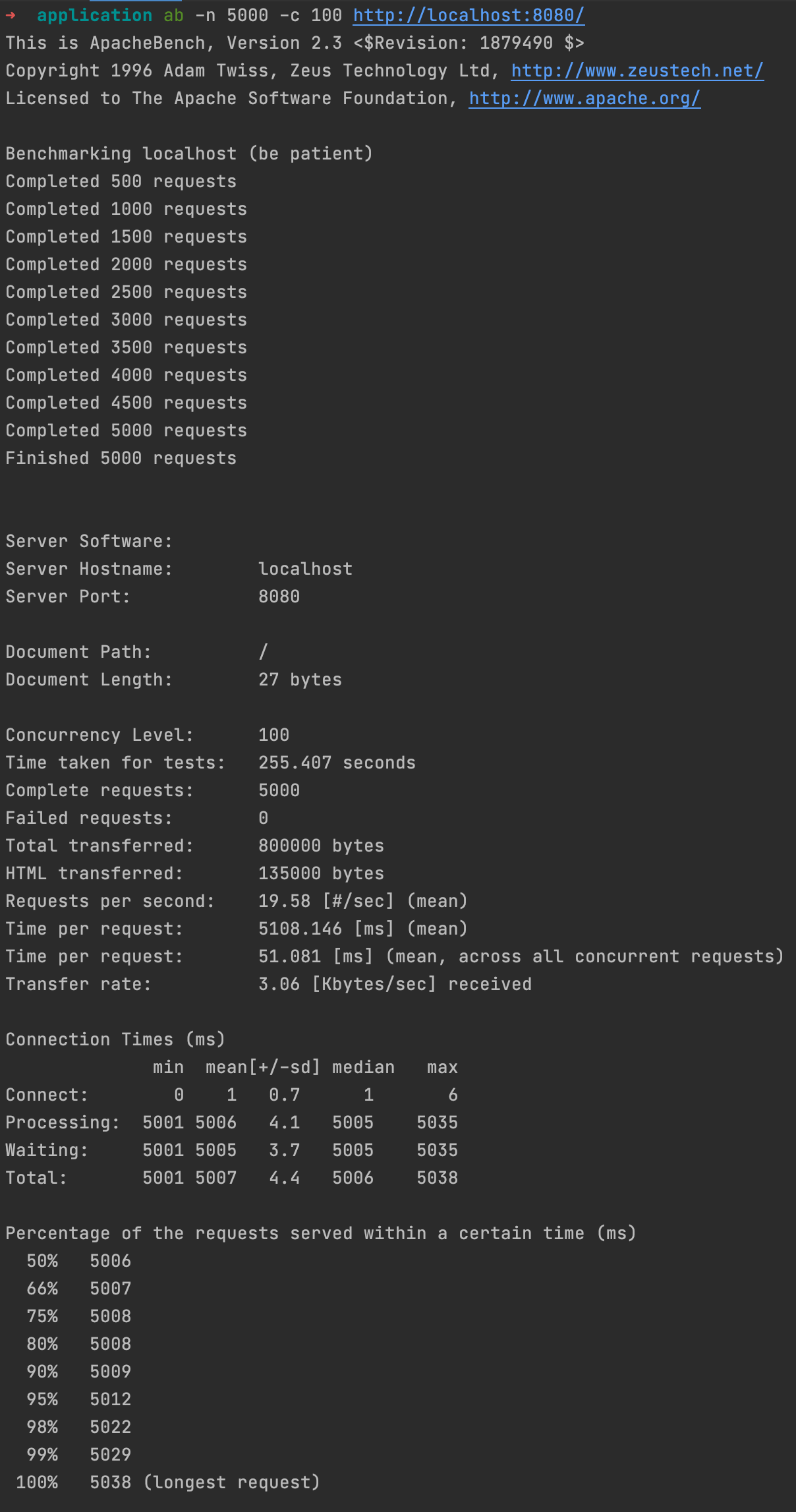

We have a page that is slow to load, as if the server were overloaded. We test the page load with ApacheBench (ab), sending 5,000 requests (-n 5000) from 100 concurrent clients (-c 100) to our service.

$ ab -n 5000 -c 100 http://localhost:8080/

The 5,000 complete requests took 255.407 seconds (Time taken for tests). This means we were able to serve 19.53 requests per second (Requests per second).

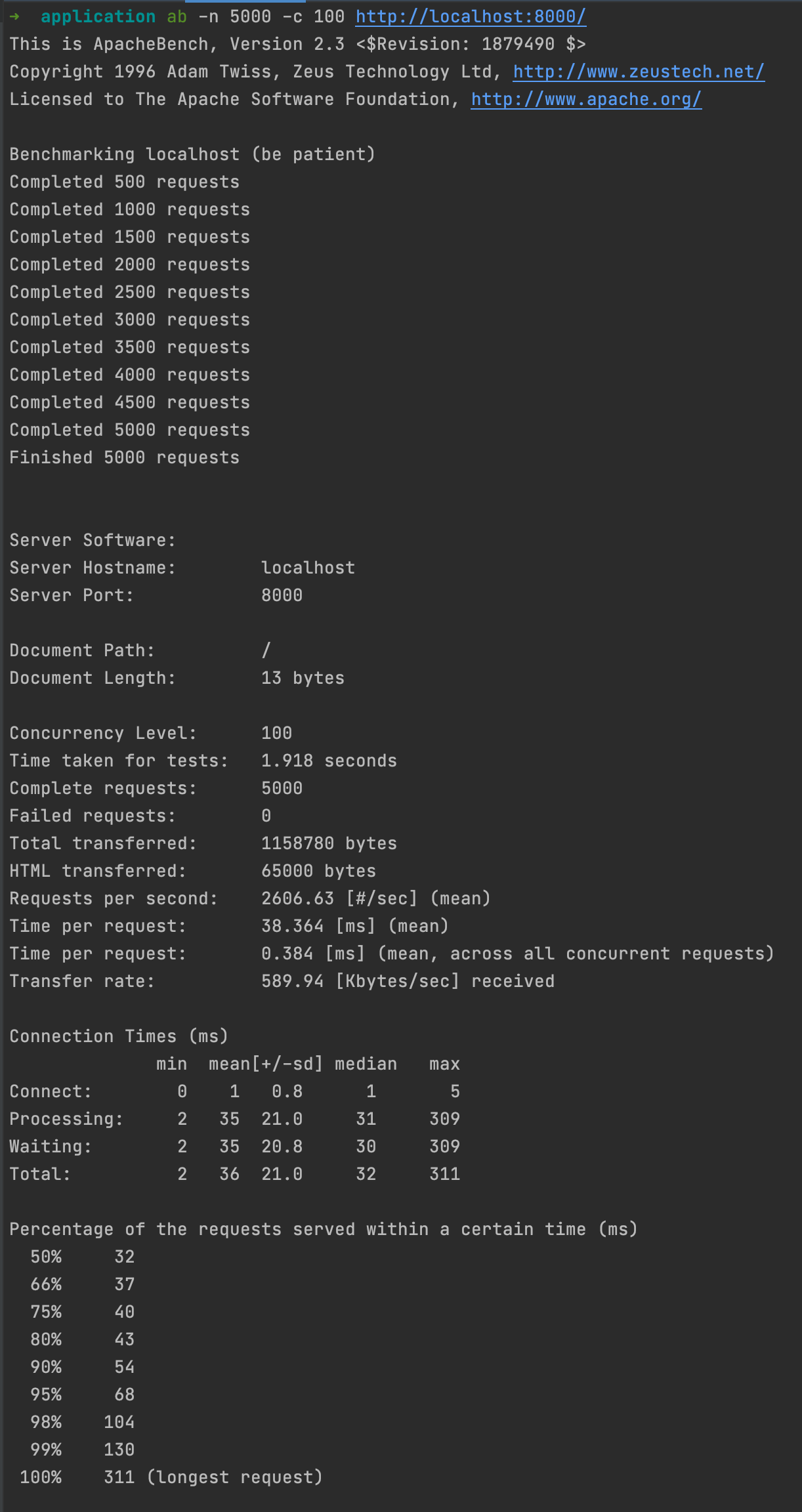

Let's try it with Varnish:

$ ab -n 5000 -c 100 http://localhost:8000/

This time, the 5,000 complete requests returned in 1.918 seconds (Time taken for tests)! We now have a server that can respond to 2,606.63 requests per second.

Without Varnish, the server responds to 19.53 requests per second; with Varnish, it responds to 2,606.63 requests per second. That is a gain of more than 100x (roughly 133x by these figures). Put roughly, a single server was able to do the work of 100 servers.
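The figures reported by ab can be sanity-checked with a few lines of arithmetic. The sketch below uses only numbers quoted in this post; `ideal_duration` is a hypothetical helper that assumes every uncached request takes exactly the 5 seconds of the Thread.sleep call and that all 100 concurrent slots stay busy.

```python
def ideal_duration(total_requests: int, concurrency: int, latency_seconds: float) -> float:
    """Best-case wall time when `concurrency` requests are always in flight."""
    return total_requests / concurrency * latency_seconds


# Lower bound for the run without Varnish: 5,000 requests, 100 at a time, 5 s each.
baseline_floor = ideal_duration(5000, 100, 5.0)

# Speedup computed two ways from the reported ab output.
speedup_by_time = 255.407 / 1.918   # ratio of "Time taken for tests"
speedup_by_rate = 2606.63 / 19.53   # ratio of "Requests per second"

print(f"best case without cache: {baseline_floor:.0f} s")
print(f"speedup: {speedup_by_time:.1f}x by time, {speedup_by_rate:.1f}x by rate")
```

The best case without a cache works out to 250 seconds, close to the measured 255.407 seconds, so nearly all of the uncached run is spent waiting on the 5-second backend. Both speedup ratios come out at about 133x.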

You can find the code for this application in my varnish-caching repo.